Okay, time for my first post. This is my first project in Rust. I'm an amateur coder, so don't expect anything too mind-blowing here.

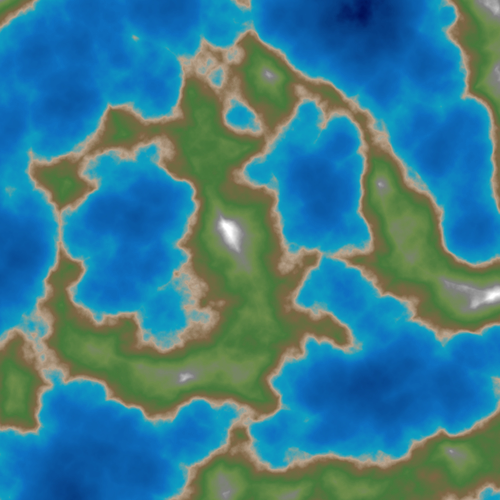

I played around with terrain generation a bit, but I got ahead of myself. Before I can start to really plan out the scope of this thing I need to see what my limits are regarding RAM and disk space usage. Dwarf Fortress' largest worlds are I believe somewhere around 196608x196608x256? tiles. That's an impressive number. If I were to store just a single byte for each of those tiles it would add up to over 9.2 TiB of data.

So obviously some kind of sparse data structure is going to be helpful here. B-trees seem like a good fit, and the Rust stdlib has something called BTreeMap. With a B-tree I can have a huge blank canvas, but only save the x,y,z coordinates of discovered or modified tiles.

After thinking a bit, I decided to limit map size to 65536x65536x256 tiles. It's plenty huge to get started with, and my coordinates of (u16,u16,u8) will take up less memory.

I put together a little test. I wanted to test how fast I could insert 2,000,000 triplets of (u16,u16,u8) into a B-tree, write it to disk, read it back from disk, and compare each value to make sure that I serialized and deserialized all the data correctly. For serialization I'll be using bincode.

Results for flat b-tree as XYZ: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 598.6441ms, maximum time = 659.3505ms, mean time = 616.20734ms, file size = 11.44 MiB

Well that doesn't seem terrible. 2 million inserts, serialize/deserialize, and 2 million compares on a B-tree in 663ms...and bincode even worked some magic to make the file nice and compact. I wonder though, would reordering the coordinates to z,x,y speed it up since I only have 256 Z levels, but 64,000 width/height?

I think I'll extend this test in the most ugly way possible and find out.

Results for flat b-tree as XYZ: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 607.8915ms, maximum time = 656.4575ms, mean time = 619.5944ms, file size = 11.44 MiB

Results for flat b-tree as ZXY: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 867.2752ms, maximum time = 900.4827ms, mean time = 881.3094ms, file size = 11.44 MiB

Well that's no good, it's slower. I can't help but think there must be a more efficient way to store these coordinates. If I nest the B-trees I can index individual B-trees with just u8.

My first attempt looks like this:

pub struct DeeplyNestedBTree<T> {

buf: BTreeMap<u8, Branch4<T>>,

}

struct Branch<T> {

pub buf: BTreeMap<u8, T>

}

struct Branch2<T> {

pub buf: BTreeMap<u8, Branch<T>>

}

struct Branch3<T> {

pub buf: BTreeMap<u8, Branch2<T>>

}

struct Branch4<T> {

pub buf: BTreeMap<u8, Branch3<T>>

}

It didn't go well. For every get and insert it has to traverse all the B-trees. Here are the test results:

Results for flat b-tree as XYZ: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 586.4466ms, maximum time = 639.7647ms, mean time = 602.92022ms, file size = 11.44 MiB

Results for flat b-tree as ZXY: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 850.5513ms, maximum time = 863.2367ms, mean time = 858.85032ms, file size = 11.44 MiB

Results for deeply nested btree: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 1.5296632s, maximum time = 1.5497243s, mean time = 1.5375108s, file size = 22.08 MiB

It's not only slower, but takes up a lot more memory. I had another idea. I could transform (u16,u16,u8) coordinates into a group of shallower nested B-trees. Here's what I came up with:

pub struct NestedBTree<T> {

x65535y65535: BTreeMap<(u8, u8), BTreeMap<(u8, u8, u8), T>>,

x65535y255: BTreeMap<(u8, u8), BTreeMap<(u8, u8, u8), T>>,

x255y65535: BTreeMap<(u8, u8), BTreeMap<(u8, u8, u8), T>>,

x255y255: BTreeMap<(u8, u8), BTreeMap<(u8, u8, u8), T>>,

}

impl<T> NestedBTree<T> {

pub fn new() -> NestedBTree<T> {

Self {

x255y255: BTreeMap::new(),

x255y65535: BTreeMap::new(),

x65535y255: BTreeMap::new(),

x65535y65535: BTreeMap::new(),

}

}

#[inline(always)]

pub fn get (&self, x:u16, y:u16, z: u8) -> Result<&T, &'static str> {

if x > 255 && y > 255 {

let child = match self.x65535y65535.get(&((x/256) as u8, (y/256) as u8)) {

None => return Err("Not found"),

Some(s) => s,

};

let child = match child.get(&((x%256) as u8, (y%256) as u8, z)) {

None => return Err("Not found"),

Some(s) => s,

};

return Ok(&child);

}

else if x > 255 {

let child = match self.x65535y255.get(&((x/256) as u8, y as u8)) {

None => return Err("Not found"),

Some(s) => s,

};

let child = match child.get(&((x%256) as u8, y as u8, z)) {

None => return Err("Not found"),

Some(s) => s,

};

return Ok(&child);

}

else if y > 255 {

let child = match self.x255y65535.get(&(x as u8, (y/256) as u8)) {

None => return Err("Not found"),

Some(s) => s,

};

let child = match child.get(&(x as u8, (y%256) as u8, z)) {

None => return Err("Not found"),

Some(s) => s,

};

return Ok(&child);

}

else {

let child = match self.x255y255.get(&(x as u8, y as u8)) {

None => return Err("Not found"),

Some(s) => s,

};

let child = match child.get(&(x as u8, y as u8, z)) {

None => return Err("Not found"),

Some(s) => s,

};

return Ok(&child);

}

}

#[inline(always)]

pub fn insert(&mut self, x:u16, y:u16, z: u8, value: T) {

if x > 255 && y > 255 {

if !self.x65535y65535.contains_key(&((x/256) as u8, (y/256) as u8)){

self.x65535y65535.insert(((x/256) as u8, (y/256) as u8), BTreeMap::new());

}

let child = self.x65535y65535.get_mut(&((x/256) as u8, (y/256) as u8)).unwrap();

child.insert(((x%256) as u8, (y%256) as u8, z), value);

}

else if x > 255 {

if !self.x65535y255.contains_key(&((x/256) as u8, y as u8)){

self.x65535y255.insert(((x/256) as u8, y as u8), BTreeMap::new());

}

let child = self.x65535y255.get_mut(&((x/256) as u8, y as u8)).unwrap();

child.insert(((x%256) as u8, y as u8, z), value);

}

else if y > 255 {

if !self.x255y65535.contains_key(&(x as u8, (y/256) as u8)){

self.x255y65535.insert((x as u8, (y/256) as u8), BTreeMap::new());

}

let child = self.x255y65535.get_mut(&(x as u8, (y/256) as u8)).unwrap();

child.insert((x as u8, (y%256) as u8, z), value);

}

else {

if !self.x255y255.contains_key(&(x as u8, y as u8)){

self.x255y255.insert((x as u8, y as u8), BTreeMap::new());

}

let child = self.x255y255.get_mut(&(x as u8, y as u8)).unwrap();

child.insert((x as u8, y as u8, z), value);

}

}

}

And the results are much better (it's the last test). Around 25% faster and a good bit smaller memory footprint too:

Results for flat b-tree as XYZ: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 590.74ms, maximum time = 673.3517ms, mean time = 615.34436ms, file size = 11.44 MiB

Results for flat b-tree as ZXY: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 857.0916ms, maximum time = 877.4087ms, mean time = 865.27806ms, file size = 11.44 MiB

Results for deeply nested btree: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 1.5320715s, maximum time = 1.5865624s, mean time = 1.54960012s, file size = 22.08 MiB

Results for nested btree: # of tests: 5, iterations = 2000000, width = 64000, serialize=true

minimum time = 432.0617ms, maximum time = 450.3567ms, mean time = 440.51756ms, file size = 7.78 MiB

I want to do one more test. I want to see what 100,000,000 bytes inserted into the different B-trees looks like:

x,y,z insert finished...23.9162098s elapsed

x,y,z write finished...2.5539319s elapsed

x,y,z read finished...9.7052168s elapsed

x,y,z compare finished...33.5086576s elapsed

Data is the same = true

total elapsed = 71.030024s, iterations = 100000000, dimenions = 64000 X 64000 X 256, serialize/deserialize = true

z,x,y insert finished...30.5735892s elapsed

z,x,y write finished...3.8273196s elapsed

z,x,y read finished...11.3962669s elapsed

z,x,y compare finished...46.5156209s elapsed

Data is the same = true

total elapsed = 94.0144163s, iterations = 100000000, dimenions = 256 X 64000 X 64000, serialize/deserialize = true

deeply nested insert finished...40.1103969s elapsed

deeply nested write finished...10.5372181s elapsed

deeply nested read finished...14.5991097s elapsed

deeply nested compare finished...48.1683471s elapsed

Data is the same = true

total elapsed = 127.0032545s, iterations = 100000000, dimenions = 64000 X 64000 X 256, serialize/deserialize = true

nested insert finished...16.0633079s elapsed

nested write finished...1.4942538s elapsed

nested read finished...10.3389582s elapsed

nested compare finished...17.84099s elapsed

Data is the same = true

total elapsed = 48.4802249s, iterations = 100000000, dimenions = 64000 X 64000 X 256, serialize/deserialize = true

Results for flat b-tree as XYZ: # of tests: 1, iterations = 100000000, width = 64000, serialize=true

minimum time = 71.030024s, maximum time = 71.030024s, mean time = 71.030024s, file size = 572.20 MiB

Results for flat b-tree as ZXY: # of tests: 1, iterations = 100000000, width = 64000, serialize=true

minimum time = 94.0144163s, maximum time = 94.0144163s, mean time = 94.0144163s, file size = 572.20 MiB

Results for deeply nested btree: # of tests: 1, iterations = 100000000, width = 64000, serialize=true

minimum time = 127.0032545s, maximum time = 127.0032545s, mean time = 127.0032545s, file size = 1053.44 MiB

Results for nested btree: # of tests: 1, iterations = 100000000, width = 64000, serialize=true

minimum time = 48.4802249s, maximum time = 48.4802249s, mean time = 48.4802249s, file size = 382.73 MiB

Not bad. It's a good bit faster on all metrics, except for deserializing, which was just a tad slower than the flat B-tree. Memory footprint is also much improved. Now I can move on to terrain generation.

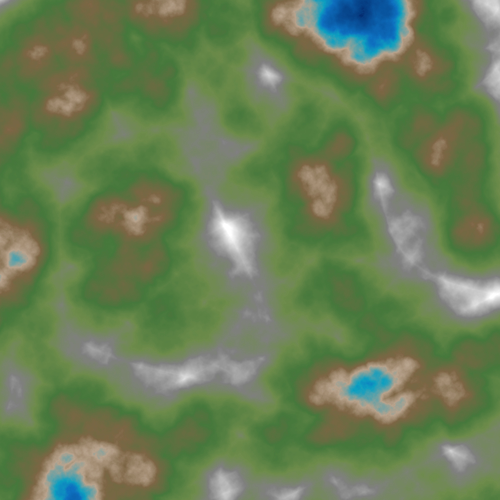

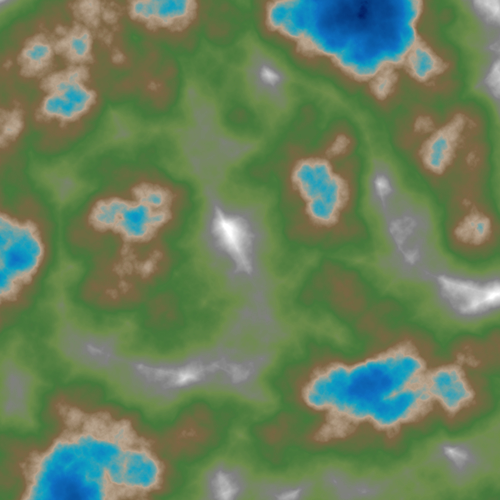

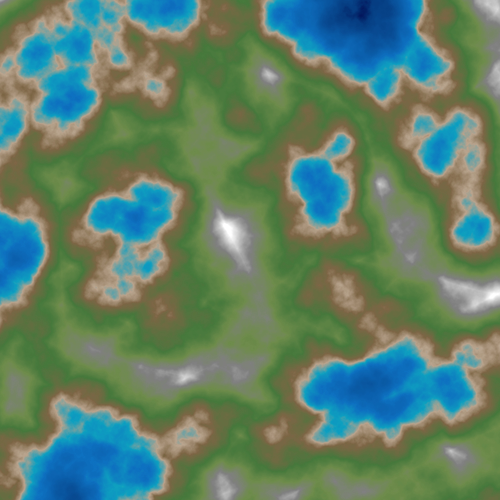

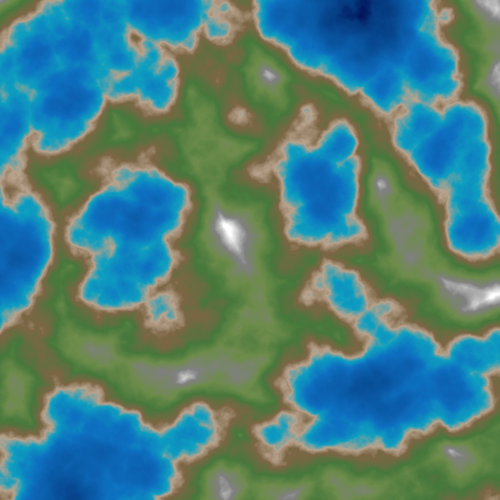

Source code from this post is available at btree_test.